There is a classic example used to explain Modern Portfolio Theory (MPT) in finance. It goes like this: there are only two businesses on a small island, one selling umbrellas and the other selling sunglasses. On average, the island has six months of dry season and six months of wet season each year, and the profits of both companies depend on the weather. However, neither season can be predicted in advance. Which company should you invest in? MPT says you should invest in both, getting the same return without the volatility.
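The island example comes down to a few lines of arithmetic. The Python sketch below uses hypothetical seasonal returns (the numbers are made up, chosen only so that the two businesses move in exactly opposite directions) and assumes both seasons are equally likely.

```python
from statistics import mean, pstdev

# Hypothetical seasonal returns (in %) for each business; illustrative numbers only.
RETURNS = {
    "umbrellas": {"wet": 30.0, "dry": -10.0},
    "sunglasses": {"wet": -10.0, "dry": 30.0},
}
SEASONS = ["wet", "dry"]  # assumed to be equally likely, as in the example


def portfolio_stats(weight_umbrellas: float) -> tuple[float, float]:
    """Return (expected return, volatility) for a given share of capital in umbrellas."""
    w_u, w_s = weight_umbrellas, 1.0 - weight_umbrellas
    # Portfolio return in each season is the weighted sum of the two businesses' returns.
    outcomes = [
        w_u * RETURNS["umbrellas"][s] + w_s * RETURNS["sunglasses"][s]
        for s in SEASONS
    ]
    # Population statistics, because the two seasons are the whole (equally likely) outcome set.
    return mean(outcomes), pstdev(outcomes)


for w in (1.0, 0.0, 0.5):
    exp_ret, vol = portfolio_stats(w)
    print(f"umbrellas={w:.0%}: expected return {exp_ret:.1f}%, volatility {vol:.1f}%")
```

With these assumed numbers, either business alone returns 10% on average but swings 20% either way, while a 50/50 split earns 10% in both seasons, so its volatility drops to zero. Real businesses are never perfectly negatively correlated, so in practice diversification reduces volatility rather than eliminating it.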

Modern Portfolio Theory has often been criticized as an extreme simplification of the real world. For example, the true impact of the weather might not be so clear-cut (not all rain is equal). With consumer trend modeling, however, we could gain insight into the proper investment ratios.
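If consumer trend data lets us estimate how the two businesses' returns actually move together, the investment ratio with the lowest possible volatility has a standard closed form for two assets: w_A = (var_B - cov_AB) / (var_A + var_B - 2 * cov_AB). The Python sketch below assumes we already have aligned per-period return estimates for both businesses; the function name and the sample figures are hypothetical.

```python
def min_variance_weight(returns_a: list[float], returns_b: list[float]) -> float:
    """Share of capital to put in business A so that the two-asset portfolio
    has the lowest possible variance (hypothetical helper, no constraints applied)."""
    if len(returns_a) != len(returns_b) or len(returns_a) < 2:
        raise ValueError("need two aligned series with at least two observations")
    n = len(returns_a)
    mean_a = sum(returns_a) / n
    mean_b = sum(returns_b) / n
    # Population variances and covariance of the per-period returns.
    var_a = sum((a - mean_a) ** 2 for a in returns_a) / n
    var_b = sum((b - mean_b) ** 2 for b in returns_b) / n
    cov_ab = sum((a - mean_a) * (b - mean_b) for a, b in zip(returns_a, returns_b)) / n
    # The denominator is the variance of (A - B); it is zero only if A - B is constant,
    # in which case every split has the same variance.
    denom = var_a + var_b - 2 * cov_ab
    if denom == 0:
        raise ValueError("A - B is constant; every split has the same variance")
    return (var_b - cov_ab) / denom


# Perfectly opposite seasons (the island example): an even split.
print(min_variance_weight([30, -10, 30, -10], [-10, 30, -10, 30]))  # 0.5
# Uncorrelated businesses: most of the capital goes to the steadier one (A).
print(round(min_variance_weight([12, 8, 12, 8], [20, 0, 0, 20]), 3))  # 0.962
```

In the perfectly opposite case the formula recovers the even split; when the businesses are uncorrelated it leans heavily toward the steadier one. The result can fall outside the 0 to 1 range (implying a short position), so a real allocation would usually constrain it.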

In general, consumer trend modeling is an extremely powerful asset for businesses, risk managers, and investors. However, in today’s economy, waiting for large companies to release their annual reports will likely mean acting too late. Data is often useful only while it is fresh (or, even better, real-time) and at least partly exclusive.

Collecting Data at Scale

Businesses have been collecting and producing data for as long as they have existed, although some might not consider old-school pen-and-paper inventory and shipping logs to be “data”. However, in the 21st century, the production of and access to data have increased substantially, allowing us to draw conclusions that were previously out of reach.

In the era of Big Data, there are two primary drivers behind the remarkably fast production of information: “exhaust” data and consumer interactions. “Exhaust” data is information produced, essentially, as a byproduct of business activities. Whenever two parties trade stocks on the New York Stock Exchange, the trade goes through a bureaucratic process that produces documents and other records of the interaction. These documents become “exhaust” data as they accumulate over time.
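As an illustration of how such byproducts accumulate into something analyzable, the Python sketch below rolls hypothetical trade-confirmation records up into a daily trade count and traded volume. The record fields (`trade_date`, `symbol`, `shares`) are assumptions made for the example, not the format of any real exchange feed.

```python
from collections import defaultdict
from datetime import date
from typing import NamedTuple


class TradeConfirmation(NamedTuple):
    """Hypothetical byproduct record left behind by a single trade."""
    trade_date: date
    symbol: str
    shares: int


def daily_activity(records, symbol):
    """Aggregate confirmations for one symbol into {date: (trade_count, total_shares)}."""
    counts = defaultdict(int)
    volume = defaultdict(int)
    for rec in records:
        if rec.symbol == symbol:
            counts[rec.trade_date] += 1
            volume[rec.trade_date] += rec.shares
    # Return the days in chronological order so the result reads as a time series.
    return {d: (counts[d], volume[d]) for d in sorted(counts)}


records = [
    TradeConfirmation(date(2020, 3, 2), "XYZ", 100),
    TradeConfirmation(date(2020, 3, 2), "XYZ", 250),
    TradeConfirmation(date(2020, 3, 3), "XYZ", 50),
    TradeConfirmation(date(2020, 3, 3), "ABC", 75),
]
print(daily_activity(records, "XYZ"))
# {datetime.date(2020, 3, 2): (2, 350), datetime.date(2020, 3, 3): (1, 50)}
```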

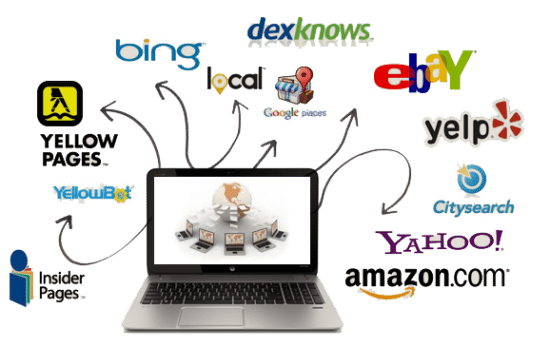

Consumer interactions are another type of data spreading like wildfire. Every business, of course, understands the importance of customer reviews. However, most customers do not limit themselves to company-owned websites.

While forums may have fallen by the wayside, there are still plenty of places where we all leave reviews, comments, and other important data. Nearly all consumer interactions can be treated as signals of movements within industries and markets, and they can also serve as proxies for other indicators. Of course, consumer interaction data has to be collected at a large enough scale to avoid extreme variations in predictions.
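The point about scale can be made concrete with the standard error of a proportion, sqrt(p(1 - p) / n). The Python sketch below assumes, purely for illustration, that 60% of collected mentions of a brand are positive, and shows how much that estimated share can be expected to wobble at different sample sizes.

```python
import math


def proportion_margin(p: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error (normal approximation) for a share p estimated from n items."""
    if n <= 0 or not 0.0 <= p <= 1.0:
        raise ValueError("need n > 0 and 0 <= p <= 1")
    return z * math.sqrt(p * (1.0 - p) / n)


# Assumed share of positive mentions; only the sample size changes between rows.
for n in (50, 500, 5_000, 50_000):
    print(f"n={n:>6}: 60% positive, +/- {proportion_margin(0.6, n):.1%}")
```

With 50 mentions the estimate could plausibly be off by about 13.6 percentage points; with 50,000 the margin shrinks to under half a point. Because the margin falls with the square root of n, each tenfold increase in data narrows it by only about a factor of 3.2. The formula also assumes the mentions behave like an independent random sample, which scraped data often does not (bots, duplicated posts, viral threads), so the real uncertainty is usually larger.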

Web Scraping for Large-Scale Data Collection

Keeping up with all the data being produced across the entire internet would normally be an impossible undertaking, and even with the best tools it still is. However, we can get pretty close, especially for data that is relevant to businesses, and we have proxy tools and solutions to thank for that.

With the advent of web scraping, collecting publicly available data from any corner of the internet has become possible. Publicly accessible data gathered through automated tools is often considered alternative data. A large proportion of our clients at Oxylabs either use proxies to support their in-house web data gathering processes or use our dedicated web scraping tool for data acquisition related to their business activities.
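For readers who have not routed scraping traffic through proxies before, here is a minimal Python sketch using only the standard library's `urllib.request`. The proxy addresses are placeholders, and the simple round-robin rotation is just one possible strategy, not a description of how any particular provider's product works.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints; substitute real ones (with credentials if required).
PROXIES = [
    "http://proxy-1.example.com:8080",
    "http://proxy-2.example.com:8080",
    "http://proxy-3.example.com:8080",
]


def make_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Build an opener that sends both HTTP and HTTPS requests through one proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Rotate through the pool so consecutive requests leave from different addresses.
_proxy_cycle = itertools.cycle(PROXIES)


def fetch(url: str, timeout: float = 10.0) -> bytes:
    """Download one URL through the next proxy in the rotation."""
    opener = make_opener(next(_proxy_cycle))
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Calling `fetch("https://example.com/")` would then route each request through the next proxy in the list. Production setups typically add retries, per-proxy health checks, and respect for each site's terms and robots.txt, all of which this sketch omits.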

Some businesses nowadays base their entire profit model on acquiring data and selling it as a service, so it is no surprise that the data industry is experiencing immense growth. Projections from previous years estimated that the entire alternative data industry would be worth $350 million in 2020.

Much of large-scale data collection involves web scraping. On the surface, web scraping is quite a simple process. An automated application visits a set list of web pages (or starts from a set list and collects more URLs along the way), downloads the content, and stores it in memory. The data is then extracted and sent off to a team of analysts or stored for later use.
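The loop described above can be sketched in a few dozen lines of standard-library Python. This is a minimal breadth-first crawler, not a production scraper: it takes a `fetch` function as a parameter (so it can run against an in-memory site for testing, or wrap `urllib.request.urlopen` in practice), follows links only within the seed's host, and keeps raw HTML in a dictionary as its "memory". All names and URLs here are illustrative.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed: str, fetch: Callable[[str], str], max_pages: int = 50) -> dict[str, str]:
    """Breadth-first crawl from `seed`, staying on the same host.

    Returns {url: html} for every page downloaded, up to `max_pages`.
    """
    host = urlparse(seed).netloc
    queue = deque([seed])
    seen = {seed}
    store: dict[str, str] = {}
    while queue and len(store) < max_pages:
        url = queue.popleft()
        try:
            html = fetch(url)
        except Exception:
            continue  # skip pages that fail to download
        store[url] = html
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments so duplicates are caught.
            absolute, _ = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return store


# A tiny in-memory "site" standing in for real HTTP requests.
site = {
    "https://shop.example/": '<a href="/a">A</a> <a href="https://other.example/">ext</a>',
    "https://shop.example/a": '<a href="/b#reviews">B</a> <a href="/">home</a>',
    "https://shop.example/b": "<p>no links</p>",
}
pages = crawl("https://shop.example/", fetch=site.__getitem__)
print(sorted(pages))
# ['https://shop.example/', 'https://shop.example/a', 'https://shop.example/b']
```

The extraction step (turning stored HTML into structured fields) would follow as a separate pass over `store`, which keeps downloading and parsing independently testable.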

Under the hood, of course, the process is far more complex. However, for the purposes of understanding how “exhaust” data and consumer interactions might be used for predictive modeling, this explanation should suffice.

Consumer Trend Modeling

Consumer trends are often among the best predictive signals for movements within specific industries. There are, of course, also global movements across industries and sectors (as we saw with COVID-19), but we are unlikely to glean much useful information about such unusual circumstances from web scraping or exhaust data.

Every modeling process needs a hypothesis. For simplicity’s sake, let’s assume that global independent brand name mentions indicate consumer interest in a particular category of products. By independent mentions, I mean content that was not created by a particular company as part of a campaign (e.g., press releases and company blog posts would not count as indicators).
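Operationalizing that hypothesis means deciding, mention by mention, whether the content is independent. One simple, admittedly crude, way to do this is sketched below in Python: a mention is discarded if it was published on a domain the brand itself controls, or if it is labeled as campaign content. The `Mention` record, the brand-to-domain mapping, the content-type labels, and the brand names are all hypothetical inputs that a real pipeline would have to produce.

```python
from collections import Counter
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class Mention:
    brand: str
    url: str
    content_type: str  # assumed labels, e.g. "review", "forum_post", "press_release"


# Hypothetical mapping of brands to the domains they own or control.
BRAND_DOMAINS = {
    "AcmeShades": {"acmeshades.example", "blog.acmeshades.example"},
    "RainCo": {"rainco.example"},
}
# Content types treated as company campaigns rather than independent mentions.
CAMPAIGN_TYPES = {"press_release", "sponsored"}


def is_independent(m: Mention) -> bool:
    """True if the mention was neither published by the brand nor labeled as a campaign."""
    host = urlparse(m.url).netloc.lower()
    owned = BRAND_DOMAINS.get(m.brand, set())
    return host not in owned and m.content_type not in CAMPAIGN_TYPES


def independent_mention_counts(mentions) -> Counter:
    """Count independent mentions per brand."""
    return Counter(m.brand for m in mentions if is_independent(m))


mentions = [
    Mention("AcmeShades", "https://forum.example/t/123", "forum_post"),
    Mention("AcmeShades", "https://blog.acmeshades.example/summer", "blog_post"),
    Mention("RainCo", "https://reviews.example/rainco", "review"),
    Mention("RainCo", "https://news.example/rainco-launch", "press_release"),
]
print(independent_mention_counts(mentions))  # Counter({'AcmeShades': 1, 'RainCo': 1})
```

Exact host matching is a simplification: a real filter would also need to handle subdomains, syndicated copies of press releases, and undisclosed sponsored posts.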

Both exhaust data and web scraping can yield fruitful results. In this case, web scraping is the simpler approach: monitoring internet boards, social media, and other avenues of consumer interaction provides an easy way of collecting brand mentions. In fact, there are tools out there that already do this (albeit often to a limited extent).

Exhaust data can be a little harder to track down. Generally, you would have to look for data-as-a-service (DaaS) providers that sell information on consumer actions related to industry activities. Unfortunately, it’s impossible to say exactly what that data might be for every industry.

Collecting Alternative (or auxiliary) Data

Backing up conclusions derived from datasets with additional evidence is a necessity. With big data, it’s easy to be led astray by the desire to find something useful. Without supporting evidence, we can’t truly be sure that the signals are accurate.

One of the most common ways to collect supporting evidence is to gather historical data on the same phenomenon. Note, however, that some patterns in historical data may not repeat in the future. Still, some information on trends and actions is always better than none at all.
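A simple way to put a new reading in historical context is to ask how unusual it is compared with the same series in the past. The Python sketch below computes a z-score of the latest weekly mention count against a historical window; the counts are made up, and a z-score assumes the history is roughly stable, which the future need not respect.

```python
from statistics import mean, stdev


def zscore_vs_history(history: list[float], latest: float) -> float:
    """How many standard deviations `latest` sits above (or below) the historical mean."""
    if len(history) < 2:
        raise ValueError("need at least two historical observations")
    sd = stdev(history)  # sample standard deviation of the historical window
    if sd == 0:
        raise ValueError("history has no variation; z-score undefined")
    return (latest - mean(history)) / sd


# Hypothetical weekly independent-mention counts for one brand.
weekly_mentions = [120, 135, 128, 140, 122, 131, 126, 138]
z = zscore_vs_history(weekly_mentions, latest=190)
print(f"z = {z:.1f}")  # z = 8.2
```

A reading roughly eight standard deviations above the recent norm would be worth investigating, but it is corroborating evidence, not proof: a single viral post or a change in how the data is collected can produce the same spike.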

A great example of using auxiliary data to derive additional insights (or support conclusions) comes from a scientific paper by Johan Bollen and his colleagues. They collected large-scale Twitter feeds, used tools to automatically assign moods (e.g., Calm, Alert, Sure, Vital, Kind, and Happy), and correlated those moods with the Dow Jones Industrial Average (DJIA). They found that Twitter mood could predict the daily up/down movements of the DJIA with 87.6% accuracy.
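The headline number in such studies is a directional accuracy: the share of days on which the predicted up/down move matched the actual one. Bollen and colleagues used considerably more elaborate methods (Granger causality analysis and a self-organizing fuzzy neural network); the Python sketch below shows only the scoring step, applied to a naive, hypothetical rule that predicts "up" whenever the previous day's mood score rose. The mood and price series are invented.

```python
def directions(series: list[float]) -> list[bool]:
    """True where the value rose relative to the previous observation."""
    return [b > a for a, b in zip(series, series[1:])]


def directional_accuracy(predicted_up: list[bool], actual_up: list[bool]) -> float:
    """Share of periods where the predicted direction matches the actual one."""
    if len(predicted_up) != len(actual_up) or not actual_up:
        raise ValueError("need two non-empty series of equal length")
    hits = sum(p == a for p, a in zip(predicted_up, actual_up))
    return hits / len(actual_up)


# Hypothetical daily mood scores and index closes for the same seven days.
mood = [0.10, 0.15, 0.12, 0.20, 0.18, 0.25, 0.22]
closes = [100.0, 100.5, 101.2, 100.8, 101.9, 101.1, 100.9]

mood_up = directions(mood)     # mood change from day t-1 to day t
close_up = directions(closes)  # index change from day t-1 to day t
# Naive rule: yesterday's mood change predicts today's index change (one-day lag).
acc = directional_accuracy(mood_up[:-1], close_up[1:])
print(f"directional accuracy: {acc:.0%}")  # directional accuracy: 80%
```

Scored on five invented days, the 80% here means nothing statistically; a meaningful test needs a long out-of-sample period and a comparison against simple baselines such as always predicting "up".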

While Twitter users are not “consumers” of the Dow Jones Industrial Average, we can see how certain signals can yield good predictions of certain economic indicators. Likewise, eCommerce businesses might look to web scraping and internal data collection for signals of longer-term consumer trends in order to adapt efficiently.
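One common, simple way to separate a longer-term shift from week-to-week noise is to compare a short moving average with a long one. The Python sketch below flags weeks where the short-window average of mention counts crosses above the long-window average; the window lengths and the data are arbitrary choices for illustration, not a recommended configuration.

```python
from typing import Optional


def moving_average(values: list[float], window: int) -> list[Optional[float]]:
    """Trailing mean over `window` points; None until enough points exist."""
    out: list[Optional[float]] = []
    for i in range(len(values)):
        if i + 1 < window:
            out.append(None)
        else:
            out.append(sum(values[i + 1 - window : i + 1]) / window)
    return out


def upward_crossovers(values: list[float], short: int = 3, long: int = 6) -> list[int]:
    """Indices where the short average moves from at-or-below to above the long average."""
    if short >= long:
        raise ValueError("short window must be smaller than long window")
    short_ma = moving_average(values, short)
    long_ma = moving_average(values, long)
    hits = []
    for i in range(1, len(values)):
        # Skip until both averages exist for the previous point (and hence this one).
        if short_ma[i - 1] is None or long_ma[i - 1] is None:
            continue
        if short_ma[i - 1] <= long_ma[i - 1] and short_ma[i] > long_ma[i]:
            hits.append(i)
    return hits


# Hypothetical weekly mention counts: flat for a while, then a sustained rise.
weekly = [50, 52, 49, 51, 50, 48, 50, 58, 63, 70, 74, 79]
print(upward_crossovers(weekly))  # [7]
```

The crossover fires at week index 7, the first week of the sustained rise. Shorter windows react faster but also fire on noise, so the window lengths are a trade-off that would need to be tuned against historical data.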

Conclusion

If it isn’t already, external public data collection will become part of every digital business’s daily life. We are already seeing a trend toward including data teams in various industries. According to data from alternativedata.org, the number of full-time employees in data-related roles has grown by about 450% in the last five years.

Within eCommerce, data collection allows businesses to predict consumer trends, sentiment, and potential movements. Instead of relying only on business acumen or internal data, eCommerce giants will be able to remove inefficiencies by following consumer trends.

The opinions expressed in this post belong to the individual contributors and do not necessarily reflect the views of Information Security Buzz.