In the beginning we built a world of simple computer systems, most of them of the stand-alone variety. It was not long, however, before these simple, isolated systems grew into local, metropolitan, and wide-area networks (LANs, MANs, and WANs), and then into fully fledged global infrastructures of wired, interconnected systems, most of which rely on the open environment of that vast Super Highway, AKA the Internet.

As technology progressed, driven in part by some of those madcap visions of an open world of trust, we soon saw the emergence of the Firewall, closely followed by a 'Bastille effect' of perimeter security devices: IDS, IPS, and a whole host of other protective and content-filtering technologies, all recognising the need to reinforce the business perimeter wherever it terminated or was presented. In a nutshell, however, our focus was on the logical facts we recognised as pertinent to the known profile.

As time passed, Security/Penetration Testing became accepted and common terminology, and it soon became the norm for anyone concerned with keeping their deployments free from breach, compromise, or unauthorised intrusion to have such services run on a regular basis. In my humble opinion, however, this approach no longer goes far enough to underpin what should be considered robust defences, as there is a great deal an organisation may not know about the exploitable profile it presents.

By way of background, I recently gave an interview to Steve Gold of SC Magazine on the subject of taking a different approach to delivering the security mission. In that interview I commented that there is now a need for more out-of-the-box, lateral thinking on the Cyber Security strategy front, implying that it is not necessarily about working with what you know, but more about viewing the threats against IT resources in a different way: for example, as Neo did in The Matrix when he observed data in dimensional forms and alphanumeric streams. To a large extent, this is what I feel we need to work toward today to deliver robust defences in the modern age, countering and mitigating modern Cyber Threats by engaging the intrinsic value of Big/Deep Data, complemented with Big Thinking.

This brings me to an occasion when I was supporting a client in selecting and engaging the Professional Services of an established Penetration Testing company. When I asked what they felt should be included in the test schedules, their response was ‘you tell us the IP range, and we will test it’. However, when I asked whether they could offer the client a more informative approach, telling them what they didn’t know and what should also be included in the test schedules, the consultant was unable to articulate a meaningful response.

OK, so as most of us may agree, if the current statistics are to be believed [and I believe them], with Cyber Crime running at its current successful level, it is fair to assert that the bad guys and girls are winning the current battle, and the big-name organisations that have fallen victim only tend to further attest to the fact.

Before moving on to the next part of this Blog, I admit up front that, like many from those decades of imagination, I dabbled in what we now refer to as hacking, and learned some of the craft from hands-on experience, with no interest in policy or compliance, but with a keen focus on just one thing: gaining access.

As we have progressed into the wider spectrum of interconnection, embellished by complex protocols, systems, smart architectures, and code, these have in turn introduced underpinning logical support structures and dependencies, which can, and do, present the attacker, or for that matter the Security Tester, with very rich pickings. All you have to do is look at that Matrix in its dimensional forms and alphanumeric streams. In this article I refer to this as OSINT [Open Source Intelligence], applied through an established process of Security Triage to discover what you don’t know.

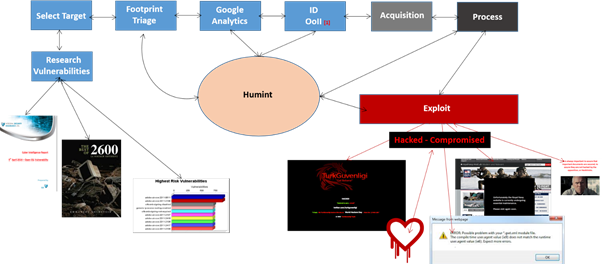

Fig 1 Triage Lifecycle

So let us consider the various components depicted in Fig 1 and understand just where they may fit into the overall plan:

Target Selection: This is the obvious input element: deciding which target, or targets, are in scope. These may include the prime URL, IP addresses [Brand], or other associated parties [e.g., Third Parties or contracted Cloud providers].

Research: The fundamental underpinning of any such operation is access to resources and research materials, ranging from current known vulnerabilities and zero-day reports to long-standing documented information from the world of White, Grey, and Black Hat repositories, such as 2600.

Footprinting/Triage: This element involves extending our logical tentacles into the wider interconnected world, seeking out whatever information is available on the target, and documenting it as an early assessment of its potential value.

Google Analytics: Honed use of Google’s advanced search operators (often called Google hacking) is a powerful tool, allowing the researcher, or attacker, to tightly profile the search criteria with advanced strings, cutting out the noise and getting personal with the materials, objects, and data being sought.
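As a minimal sketch of this kind of tight profiling, the Python below assembles advanced-operator query strings from a target domain, file types of interest, exact phrases, and excluded hosts. It assumes the widely used `site:` and `filetype:` operators, quoted exact-phrase matching, and `-` exclusion; the function names, the example domain, and the phrase are hypothetical. The code only builds query strings and search URLs; it does not submit them.

```python
from typing import Iterable, List
from urllib.parse import quote_plus


def build_queries(domain: str, filetypes: Iterable[str],
                  phrases: Iterable[str] = (),
                  exclude: Iterable[str] = ()) -> List[str]:
    """Build one advanced-operator query per file type (hypothetical helper)."""
    phrases, exclude = list(phrases), list(exclude)
    queries = []
    for ft in filetypes:
        parts = [f"site:{domain}", f"filetype:{ft}"]
        parts += [f'"{p}"' for p in phrases]        # exact-phrase matches
        parts += [f"-site:{host}" for host in exclude]  # drop noisy hosts
        queries.append(" ".join(parts))
    return queries


def to_search_url(query: str) -> str:
    """URL-encode a query for a Google search results page."""
    return "https://www.google.com/search?q=" + quote_plus(query)
```

For example, `build_queries("example.com", ["pdf", "xlsx"], phrases=["internal use only"])` yields one query per file type, such as `site:example.com filetype:pdf "internal use only"`. Generating a separate query per file type, rather than combining them with `OR`, keeps each result set small and easy to attribute to a single search.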

OoII: When we arrive at what we refer to as the OoII [Objects-of-Intelligence-Interest] level, we are in a position to identify what we wish to add to the overall pot of our target footprinting activity. This may include everything from Humint relationships [People] and Technological finds [IP addresses, Servers, etc.] to Information Objects carrying additional, hidden information, such as MetaData, which may further underpin the attack.
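Document metadata is a good example of such hidden information. As a minimal sketch, assuming the acquired objects are Office Open XML files (.docx, .xlsx, .pptx), which are ZIP archives holding their core properties in `docProps/core.xml`, the standard-library Python below extracts author-related fields such as the creator and last-modified-by names. The function name and output keys are illustrative; other formats, such as PDF, store metadata differently and would need their own parser.

```python
import zipfile
import xml.etree.ElementTree as ET
from typing import BinaryIO, Dict, Union

# XML namespaces used by docProps/core.xml in Office Open XML packages.
NS = {
    "cp": "http://schemas.openxmlformats.org/package/2006/metadata/core-properties",
    "dc": "http://purl.org/dc/elements/1.1/",
    "dcterms": "http://purl.org/dc/terms/",
}

# Output key -> child element of the coreProperties root.
FIELDS = {
    "creator": "dc:creator",
    "last_modified_by": "cp:lastModifiedBy",
    "title": "dc:title",
    "created": "dcterms:created",
    "modified": "dcterms:modified",
}


def ooxml_core_metadata(source: Union[str, BinaryIO]) -> Dict[str, str]:
    """Return selected core properties from a .docx/.xlsx/.pptx package.

    `source` may be a path or a binary file-like object. Fields that are
    missing or empty are omitted; a package with no docProps/core.xml
    yields an empty dict.
    """
    with zipfile.ZipFile(source) as package:
        try:
            raw = package.read("docProps/core.xml")
        except KeyError:  # ZipFile.read raises KeyError for absent members
            return {}
    # For untrusted files, defusedxml.ElementTree is a safer drop-in parser.
    root = ET.fromstring(raw)
    metadata = {}
    for key, path in FIELDS.items():
        element = root.find(path, NS)
        if element is not None and element.text:
            metadata[key] = element.text.strip()
    return metadata
```

Run across a collection of acquired documents, the `creator` and `last_modified_by` values may surface internal user IDs and naming conventions, which is exactly the kind of OoII that can feed the Humint side of the footprint.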

Acquisition: Having settled on the areas that fall under our OoII focus, we then acquire them and bring them back for off-line use, very much akin to the acquisition of the password file documented by Cliff Stoll in The Cuckoo’s Egg. It’s all about off-line, passive analysis, with the luxury of time.

Process: At this stage we process our discoveries, allocate value, and place high focus on the OoII of most value to feed the next, and final, stage of the Triage Lifecycle.
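No particular scoring scheme is prescribed here, so the following is purely a hypothetical sketch of how value might be allocated. Each finding receives analyst-assigned 1 to 5 ratings for exposure and exploitability, which are multiplied together and weighted by OoII category, and the list is then sorted so the highest-value items feed the Exploit stage first. The `Finding` type, the category names, and the weights are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical weights per OoII category; tune these to the engagement.
CATEGORY_WEIGHT = {"humint": 1.2, "technical": 1.0, "information_object": 0.8}


@dataclass
class Finding:
    name: str
    category: str        # "humint", "technical" or "information_object"
    exposure: int        # analyst rating 1-5: how openly reachable it is
    exploitability: int  # analyst rating 1-5: how directly it aids an attack


def score(finding: Finding) -> float:
    """Weighted product of the two ratings; unknown categories weigh 1.0."""
    weight = CATEGORY_WEIGHT.get(finding.category, 1.0)
    return weight * finding.exposure * finding.exploitability


def prioritise(findings: List[Finding], top_n: Optional[int] = None) -> List[Finding]:
    """Return findings ordered from highest to lowest score, optionally truncated."""
    ranked = sorted(findings, key=score, reverse=True)
    return ranked if top_n is None else ranked[:top_n]
```

A multiplicative score is used so that a finding that is highly exposed but barely exploitable (5 × 1 = 5) ranks below one that is moderately strong on both (3 × 3 = 9); an additive score, which would tie these two at 6, is an equally defensible choice if that behaviour is preferred.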

Exploit: The end-game, of course, is to turn any artefacts discovered into materials that may either be leveraged directly to exploit the target, or targets, or act as indirect feeds to further the mission of incursion against the target.

The Results: OK, so all of this is very interesting [at least I hope it is], but what does it mean in the real world? Consider all of this seemingly nonsensical, at times unrelated data, and the associated disjointed information, each component of which may have no real value in isolation. However, when you start to put the Matrix jigsaw together, you begin to see the Big Picture, which may then be leveraged. Just consider the usable vectors of attack that such a Triage approach has revealed in the past:

Example 1: The discovery that a UK Central Bank had, without realising it, suffered a compromise by Chinese-attached domains, which were provisioning bi-directional flows of illicit information from its core of operations.

Example 2: The discovery of significant exposures within the DNS profile, which in turn led to the compromise of internal, protected servers, and from there to the acquisition and compromise of sensitive data objects.

Example 3: The next example is in the arena of Humint, and involved targeting individuals with a Phishing Attack based on Social Engineering. Here we were able to obtain logical information from one organisation’s Data Leakage, which identified users’ IDs and email addresses. Given that their telephone extensions were included within their User IDs, the exercise was able to place a direct call to one individual and discuss the actual document the target user was working on.

Example 4: Another large UK financial institution was leaking a massive amount of data on which of its people were connected to the UK Government Backbone (GSX/GSi). From these nuggets it was possible to identify user names and GSX e-mail addresses, and to craft an e-mail titled ‘Re Our Meeting at the Government Event’, with some appropriate text about getting back in touch. Within seconds a reply was received, with even more information included in the signature footer: yet another successful example of a Social Engineering attack.

At the end of the day, it’s about applying the same techniques that attackers and hackers use: leveraging information that may have been forgotten, or disposed of in both the physical and the logical bin, and then piecing it together to add the attack value needed to accomplish a compromise or breach.

Professor John Walker FMFSoc FBCS FRSA CITP CISM CRISC ITPC

Visiting Professor at the School of Science and Technology at Nottingham Trent University (NTU), Visiting Professor/Lecturer at the University of Slavonia [to 2015], CTO and Company Director of CSIRT, Cyber Forensics, and Research at INTEGRAL SECURITY XASSURANCE Ltd, Practising Expert Witness, ENISA CEI Listed Expert, Editorial Member of the Cyber Security Research Institute (CSRI), Fellow of the British Computer Society (BCS), Fellow of the Royal Society of the Arts, Associate Researcher on a Research Project with the University of Ontario, and Member of, and Advisor to, the Forensic Science Society.

The opinions expressed in this post belong to the individual contributors and do not necessarily reflect the views of Information Security Buzz.