People describe the Internet as a hostile network, which is true, and that got me thinking about other hostile environments where a successful strategy results in resiliency and continuity. What if Mother Nature were the CISO? What would her strategy be? What strategy could she give the prey species so they could survive in the presence of many predators? Albert Einstein is quoted as saying, “Look deep into nature, and then you will understand everything better.”

I’m specifically interested in prey species because, like most organizations on the Internet, they have no real offensive measures, yet they are expected to adapt to a hostile environment. What matters most is the continuity of the business, just as what matters most in nature is the continuity of the species.

The Patterns of Predator Species

To get a better understanding of the defensive tactics of prey species, it is worth spending a minute talking about the dominant strategies of the predators. The three that I’ll highlight are cruising, ambush, and the blend of these, which we will call cruising-ambush. All of these offer similarities to the threat landscape we have been experiencing on the Internet.

Cruising

Cruising is where the predator is continually on the move to locate prey. This strategy is effective when the prey is widely dispersed and somewhat stationary. We see this pattern when the adversary broadly scans the Internet for targets, and these targets are stationary in the sense that once a target is found, a connection can be made repeatedly. This was the dominant strategy for attackers in the early days of the Internet, mainly because it was all about compromising servers and “pushing” the exploit to the victim. While still prominent, it is noisy, and the predator, as in nature, must weigh the risk of becoming prey itself while cruising.

Ambush

Ambush is where the predator will sit and wait. This strategy relies on the prey’s mobility to initiate encounters. Two factors account for a rise in the frequency of this pattern on the Internet: 1) cruising and attacking stationary targets like servers got much more expensive with firewalls, IDS/IPS, etc., all increasing the protection of the prey and the detection of the predator; and 2) there has been explosive growth of mobile prey via browsers and clients in general. Another fact obvious to the predator is that there is far more client prey than server prey, simply because client-server design puts many clients in front of each server. On the Internet today, we see this ambush pattern in a compromised web server sitting and waiting for prey to connect and “pull” down the exploits. The majority of malware is distributed in this ambush pattern.

Cruising-ambush

The blended cruising-ambush is by far the most effective predator pattern. The idea is to minimize exposure when cruising and employ effective ambush resources which in turn cruise, causing a loop in the pattern. A few threats exhibit this, such as a phishing campaign that broadly cruises for prey. Once the victim clicks on the phishing link, it quickly shifts to the ambush pattern, with a compromised web server sitting and waiting for the connection to then download the malware. This can continue again with that malware-infected host then cruising the internal networks looking for specific prey like DNS servers, file servers, yet another place to set up an ambush pattern, and so on.

The Patterns of Prey Species

Now that we know the predators’ strategy, let’s look at anti-predator patterns. There are many documented defensive patterns for prey species, and I’d like to explore the ones that can be applied to Internet security. In all of these cases, Mother Nature’s common pattern is one of making the prey marginally too expensive to identify and/or pursue. The predator always performs a fundamental cost/benefit analysis; years of evolution have tuned it to pursue prey only when the effort spent and the risks taken cost less than the calories the prey offers when eaten.

Certain prey species have raised the cost of observation and orientation so much that they operate outside their predators’ perceptive boundaries. Camouflage is one technique; another is making parts of the organism expendable, as with a gecko’s tail or a few bees in the colony. Camouflage is another term for costly observation, and we can achieve it either with cryptography or with random addressing within a massively large space like IPv6. For expendable parts, imagine a front-end system with 100 servers behind an Application Delivery Controller (ADC). When one of these servers is compromised, it communicates the specifics to the other 99 so that they can employ a countermeasure. The loss of the single system is an informational act, and the system adapts or evolves as one species.
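The expendable-server idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Server` and `Fleet` classes, the `report_compromise` method, and the choice of "block the offending source address" as the countermeasure are all hypothetical, and the address used comes from a range reserved for documentation.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """One expendable 'organism' behind the ADC (hypothetical model)."""
    name: str
    blocked: set[str] = field(default_factory=set)
    in_service: bool = True

    def accepts(self, source: str) -> bool:
        # A server answers only if it is in rotation and the source is not blocked.
        return self.in_service and source not in self.blocked


@dataclass
class Fleet:
    """The 'species': the service offered by all servers together."""
    servers: list[Server]

    def report_compromise(self, victim: Server, source: str) -> None:
        # The victim is sacrificed: take it out of rotation.
        victim.in_service = False
        # Its loss becomes information for every surviving peer.
        for server in self.servers:
            if server is not victim:
                server.blocked.add(source)

    def healthy(self) -> list[Server]:
        return [s for s in self.servers if s.in_service]


fleet = Fleet([Server(f"web-{i:03d}") for i in range(100)])
attacker = "203.0.113.7"  # documentation-range address, illustrative only
fleet.report_compromise(fleet.servers[0], attacker)
```

After the call, 99 servers remain in service and none of them accepts traffic from the reported source, while ordinary clients are unaffected. A real deployment would share richer indicators than a single address, but the shape is the same: the loss of one organism is an input to the rest.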

An effective countermeasure to cruising is to disperse targets or to change them frequently so that they are no longer stationary, which raises the observation and orientation requirements of the predator. In nature, we can measure cost/benefit in kcals spent versus kcals eaten; in the information space of the Internet, it must be measured in information and knowledge. If the predator has to do more probing and searching in the reconnaissance phase, it is more easily detected, so raising the cost of discovery in turn raises the predator’s risk of being discovered and caught during these early phases of the attack.
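To give a rough feel for how dispersion raises the cost of discovery, here is a minimal Python sketch using random addressing in an IPv6 /64. The prefix is the reserved documentation prefix, the helper names are hypothetical, and the probe model is a deliberate simplification: it assumes the predator probes addresses uniformly at random without repeats and has no other hints (DNS, logs, traffic captures) about where the prey lives.

```python
import ipaddress
import secrets

# Documentation prefix (RFC 3849); a stand-in for a real /64.
PREFIX = ipaddress.IPv6Network("2001:db8:0:1::/64")


def random_address(prefix: ipaddress.IPv6Network) -> ipaddress.IPv6Address:
    """Pick a host address uniformly at random inside the prefix."""
    host_bits = prefix.max_prefixlen - prefix.prefixlen
    offset = secrets.randbits(host_bits)
    return ipaddress.IPv6Address(int(prefix.network_address) + offset)


def expected_probes(address_space: int, live_hosts: int) -> float:
    """Expected probes before the first hit when scanning at random
    without repeats: (N + 1) / (k + 1) for k hosts among N addresses."""
    return (address_space + 1) / (live_hosts + 1)


new_home = random_address(PREFIX)
cost_ipv4_subnet = expected_probes(254, 100)               # dense IPv4 /24
cost_ipv6_subnet = expected_probes(PREFIX.num_addresses, 100)  # sparse IPv6 /64
```

With the same 100 live hosts, the expected search effort in the /64 is many orders of magnitude larger than in the /24, and every additional probe is another chance for the predator to be noticed. Re-running `random_address` on a schedule turns a stationary target into a moving one.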

The last prey species pattern I find useful is one of tolerance to loss. Some species have found a way to divert the predator to eat the non-essential parts and have an enhanced ability to rapidly recover from the damage. Here, the loss is not fatal but informational. As we build highly tolerant information systems, I think this is a very useful pattern: subsystems should be able to fail, and the information from that failure should be used as input to the system’s recovery processes. In some cases, entire organisms die in order for the species to survive and evolve, so I’ll spend some time talking about these two very different logical levels and how they apply to Internet security strategies.

The Resiliency of the Species

The game of survival and resiliency is at the level of species and not at the level of organism. Diversity, redundancy, and a high rate of change at the organism level provide stability at the species level. Loss at the organism level may be fatal, but it is also information to the species level.

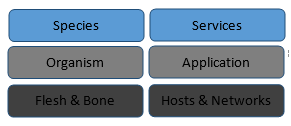

When we look at this pattern in information technology, we can quickly see the need for abstractions. We can draw a parallel between server and organism and between service and species, for example. A web server farm of 10 servers (10 organisms) sits behind a load balancer that offers a service (the species). We can also see this pattern in the highly resilient DNS top-level domain service, where the operating systems, locations, and even CPUs are diverse at the server level (the organism) but deliver the same service (species).

Abstractions are available to us in our design of these systems and we need to leverage them in the same way Mother Nature has over the past 3.8 billion years. Virtual servers, SDN (software-defined networking), virtual storage—all the parts are at our disposal to design highly resilient species (services).

By abstracting the virtual from the physical on hosts and networks, we can build services that are highly resilient at the species level while the organisms beneath them co-evolve and adapt to the changing threat.

Conclusion

We are in an age where virtualization of endpoints and networks offers us the abstraction required to mimic these patterns in nature, yet we continue to design systems where loss is fatal and not just an informational event. Every organism in an ecosystem has a perceptive boundary, a natural limit to what it can observe and understand: its observation of and orientation to the environment. It is on this plane that a hierarchy is naturally formed, and within it is the playground for survival.

Prey species have found a way to establish a knowledge margin with their environment, and this is what we must do with our information systems. The systems you protect must continuously change based on two drivers: 1) how long you think it will take your adversary to perform its reconnaissance, and 2) the detection of the adversary’s presence. Each time your systems change, the cost for the adversary to infiltrate and, most importantly, to remain hidden is raised substantially, and this is the dominant strategy found in nature. The patterns in nature are all about loops, so start to design with this in mind and you will live to compute another day.
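The two drivers for change can be expressed as a very small decision rule. The Python sketch below is an assumption-laden illustration, not a prescribed implementation: `RotationPolicy`, its field names, and the `safety_factor` (change well before the estimated reconnaissance could complete) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RotationPolicy:
    # Driver 1: how long you think the adversary needs for reconnaissance.
    recon_estimate_s: float
    # Assumption: change after a fraction of that time to keep a margin.
    safety_factor: float = 0.5

    def should_change(self, seconds_since_change: float,
                      adversary_detected: bool) -> bool:
        # Driver 2: any detection of the adversary forces an immediate change.
        if adversary_detected:
            return True
        return seconds_since_change >= self.recon_estimate_s * self.safety_factor


policy = RotationPolicy(recon_estimate_s=3600)
```

Calling `policy.should_change(...)` in a loop, and feeding each detection back into the estimate, gives the kind of loop the patterns in nature suggest: every change resets the adversary’s reconnaissance clock.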

TK Keanini, CTO at Lancope

Lancope, Inc. is a leading provider of network visibility and security intelligence to defend enterprises against today’s top threats. By collecting and analyzing NetFlow, IPFIX and other types of flow data, Lancope’s StealthWatch® System helps organizations quickly detect a wide range of attacks from APTs and DDoS to zero-day malware and insider threats. Through pervasive insight across distributed networks, including mobile, identity and application awareness, Lancope accelerates incident response, improves forensic investigations and reduces enterprise risk. Lancope’s security capabilities are continuously enhanced with threat intelligence from the StealthWatch Labs research team. For more information, visit www.lancope.com.

The opinions expressed in this post belong to the individual contributors and do not necessarily reflect the views of Information Security Buzz.